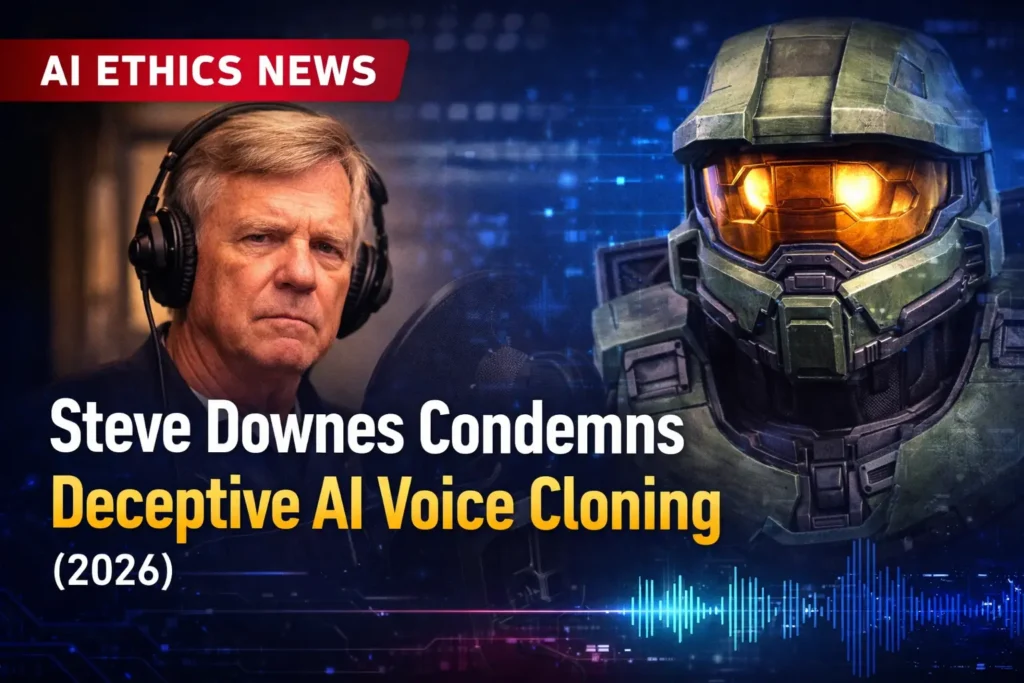

Steve Downes, the iconic voice behind Halo’s Master Chief, has entered the 2026 AI ethics debate with a clear warning: unauthorized, hyper-realistic AI voice cloning risks crossing from experimentation into deception.

In a recent YouTube AMA, Downes criticized the growing use of AI systems that replicate recognizable voices without permission, arguing that such practices mislead audiences and undermine the livelihoods of professional voice actors. While acknowledging that AI can be a powerful creative tool, he cautioned that the technology’s realism now demands stricter ethical and legal boundaries.

What is Steve Downes’ stance on AI voice cloning?

Steve Downes opposes unauthorized AI voice cloning, contending that it violates ethical standards by misleading listeners and misrepresenting performances. He warns that, as of 2026, AI voice technology has reached what he describes as an “indistinguishable threshold,” increasing the risk of fraud, reputational harm, and loss of work for professional actors across entertainment and voice-driven industries.

The “Deception” Threshold

During the AMA, Downes underscored that while early fan projects and obvious parodies were largely harmless, recent advancements have blurred the distinction between authentic human performance and synthetic reproduction.

The Problem of Misattribution

“When you get to the AI part and deceive somebody into thinking these are the lines that I actually spoke when they’re not—that’s when we cross a line,” Downes explained. His concern is that audiences will mistakenly believe a performer endorsed or delivered content they never recorded.

Impact on Professional Work

Downes’ comments echo warnings from other prominent voice actors, including Ashly Burch and Neil Newbon, who have raised concerns that unauthorized AI mimicry could divert work away from human performers and erode creative ownership.

2026: Approaching the “Indistinguishable” Era

Downes’ remarks align with a broader industry concern: AI voice systems are becoming sufficiently realistic that average listeners may struggle to tell the difference between genuine and synthetic speech.

Rising Fraud and Abuse Risks

Security researchers and consumer protection groups have reported a sharp increase in AI-assisted scam and impersonation calls, with some victims receiving multiple fraudulent calls per day. While exact volumes vary by region, the trend has intensified calls for stronger safeguards around voice authenticity.

The “Darth Vader” Precedent

Downes also referenced recent controversies involving AI-generated voices of iconic characters—such as incidents tied to Darth Vader in promotional content connected to Fortnite—which sparked public debate over brand control, character integrity, and consent. These cases illustrate how even well-intentioned uses can damage long-standing creative legacies if guardrails are unclear.

The Legal Landscape: California’s 2026 Protections

Downes’ comments come amid significant legal developments aimed at protecting digital likeness and voice rights.

- AB 1836 (Effective January 1, 2026): This California law significantly restricts the creation and distribution of digital replicas of deceased personalities without authorization from their estates, reinforcing posthumous right-of-publicity protections.

- AB 2602 (Effective January 1, 2025): This statute limits the enforceability of contract provisions that allow the creation of a digital double to replace a performer’s work without reasonably specific and informed consent.

Together, these measures signal a shift toward stronger protections for performers as AI replication capabilities mature. Legal interpretations will continue to evolve through enforcement and case law.

Strategic Analysis: Trust as the New Moat

For businesses using AI-powered voice systems—including AI outbound calling—Downes’ stance underscores a critical lesson for 2026: trust and transparency are now competitive advantages.

| Feature | Deceptive “Shadow” AI | Authentic “Agentic” AI |
|---|---|---|
| Disclosure | Hidden or unclear disclosure poses a high risk. | Clear disclosure is required in many jurisdictions and helps build trust. |
| Performance Model | Human mimicry | Intent-driven, task-focused assistance |
| Legal Exposure | Vulnerable to right-of-publicity claims | Consent-verified and compliance-aligned |

As public awareness of AI deception grows, organizations that prioritize disclosure and consent are better positioned to avoid legal exposure and reputational harm.

Key Takeaways

- Transparency Is Mandatory: Deceiving listeners into believing an AI voice is human is increasingly viewed as both unethical and legally risky.

- Identity Protection Is Rising: 2026 marks a pivotal year for right-of-publicity and digital-likeness enforcement.

- Human Judgment Still Matters: In high-trust contexts—such as entertainment, sales, and negotiations—human performance and decision-making remain central, even as AI handles assistive and clerical tasks.

Frequently Asked Questions (FAQ)

Is it illegal to clone a voice actor’s voice with AI in 2026?

Many jurisdictions, including California, are increasingly restricting the commercial use of a highly realistic digital replica of a person’s voice without explicit, informed consent. Such practices are commonly challenged under right-of-publicity, unfair competition, and fraud frameworks, and enforcement has been expanding following the 2025–2026 labor and legislative developments.

How does this affect AI outbound sales and calling?

It reinforces the need for technical transparency. As consumers grow more skeptical of synthetic voices, businesses must clearly disclose AI use in calling scripts and avoid impersonation. Transparent, consent-based AI calling helps differentiate legitimate operators from fraudsters.
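As a rough illustration of what disclosure in a calling script could look like in practice, the following Python sketch flags a script whose opening line does not mention AI use or whose voice has no recorded consent. Everything in it is a hypothetical assumption for illustration: the `CallScript` type, the `voice_consent_on_file` field, and the phrase list are not drawn from any statute, regulator, or vendor API, and a real compliance check would need legal review.

```python
from dataclasses import dataclass

# Hypothetical phrases treated as an AI disclosure (an assumption, not a legal standard).
DISCLOSURE_PHRASES = ("ai assistant", "automated assistant", "artificial intelligence")


@dataclass
class CallScript:
    opening_line: str
    # Assumed field: True if the voice is licensed with consent or is fully synthetic.
    voice_consent_on_file: bool


def compliance_issues(script: CallScript) -> list[str]:
    """Return a list of human-readable problems found in the script."""
    issues = []
    opening = script.opening_line.lower()
    # Flag scripts whose opening line never tells the listener an AI is speaking.
    if not any(phrase in opening for phrase in DISCLOSURE_PHRASES):
        issues.append("opening line does not disclose AI use")
    # Flag scripts that use a voice with no recorded consent.
    if not script.voice_consent_on_file:
        issues.append("no recorded consent for the voice used")
    return issues


if __name__ == "__main__":
    script = CallScript("Hi, this is an AI assistant calling on behalf of Acme.", True)
    print(compliance_issues(script))
```

A simple keyword check like this only catches missing disclosures; it cannot tell whether a voice imitates a real person, which would still require human review.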

Will Steve Downes appear in the Halo: Campaign Evolved remake?

Yes. In the same AMA, Downes confirmed his participation in the upcoming remake, ensuring that Master Chief remains a distinctly human-performed role rather than an algorithmic reproduction.